Climate projections continue to be marred by large uncertainties, which originate in processes that need to be parameterized in models, such as clouds, turbulence, and ecosystems. But breakthroughs in the accuracy of climate projections are finally within reach. New tools from data assimilation and machine learning make it possible to integrate global observations and local high-resolution simulations in an Earth system model (ESM) that systematically learns from both. Scientific, computational, and mathematical challenges need to be confronted to realize such an ESM, for example, developing parameterizations suitable for automated learning, and learning algorithms suitable for ESMs. While these challenges are substantial, building an ESM that learns automatically from diverse data sources is achievable now. Such an ESM offers the key opportunity for dramatic improvements in the accuracy of climate projections.

Atmospheric CO2 concentrations now exceed 400 ppm, a level last seen more than 3 million years ago, when Earth was 2–3°C warmer and sea level was 10–20 m higher than today. Even with reduced CO2 emissions, Earth will continue to warm, and sea level will continue to rise, but by how much is unclear. Projections of how climate will change are performed with global climate models, which calculate the motions and thermodynamics of air and ocean waters and interactions among other components of the Earth system on a global computational grid. But despite the significant computational effort expended, climate change projections remain highly uncertain. Some models predict the 2°C warming threshold of the 2015 Paris Agreement will be crossed in the 2030s, irrespective of emission scenarios; others project that the 2°C threshold will not be reached before the 2060s, even under high-emission scenarios. Between these extremes lie vastly different socioeconomic costs, risks of disasters, and optimal mitigation and adaptation strategies. The large uncertainties in global-mean temperature projections percolate into local climate impact projections, because the frequency and intensity of extreme rainfall, regional droughts, or tropical cyclones change with the global-mean temperature (Seneviratne et al. 2016).

The uncertainties in climate projections originate in the representation of processes such as clouds and turbulence that are not resolvable on the computational grid of global models. Parameterization schemes relate such subgrid-scale (SGS) processes semi-empirically to resolved variables such as temperature and humidity on the computational grid. Parameterization schemes are typically developed independently of the global model into which they are eventually incorporated and are tested in detail with observations from field studies at a few locations. For processes such as boundary-layer turbulence that are computable in limited areas if sufficiently high resolution is available, parameterization schemes are also increasingly tested with data generated computationally in local process studies with high-resolution models (e.g., large-eddy simulations), likewise at only a few locations. After the parameterizations are developed and incorporated into a climate model or ESM, modelers manually adjust (“tune”) parameters to satisfy large-scale constraints, such as a closed energy balance at the top of the atmosphere (Hourdin et al. 2017, Schmidt et al. 2017). This manual tuning uses only a fraction of the available data, and it does so inefficiently, leading to inconsistent tuning and compensating errors among tuned model components. As a result, large uncertainties in parameterization schemes remain.
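A parameterization can be made concrete with a classic textbook closure. The minimal sketch below uses a downgradient (eddy-diffusivity) closure, in which the SGS heat flux between two model levels is assumed to be proportional to the resolved vertical potential-temperature gradient, F = -K ∂θ/∂z. The function name and its single parameter `k_eddy` are hypothetical illustrations, not taken from any particular ESM. Here `k_eddy` stands in for the kind of semi-empirical parameter that modelers tune by hand.

```python
def eddy_diffusivity_flux(theta, dz, k_eddy):
    """Downgradient SGS heat flux F = -K * dtheta/dz at the faces between levels.

    theta:  resolved potential temperature on equally spaced levels (K)
    dz:     level spacing (m)
    k_eddy: eddy diffusivity (m^2/s), the tunable semi-empirical parameter
    Returns kinematic heat fluxes (K m/s), positive upward.
    """
    if dz <= 0:
        raise ValueError("dz must be positive")
    return [-k_eddy * (theta[i + 1] - theta[i]) / dz for i in range(len(theta) - 1)]


# Resolved profile warming with height (stable stratification): the flux points downward.
fluxes = eddy_diffusivity_flux([300.0, 301.0, 303.0], dz=100.0, k_eddy=10.0)
print(fluxes)
```

In this picture, tuning amounts to choosing `k_eddy` by hand. The approach described in this post would instead estimate such parameters from data.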

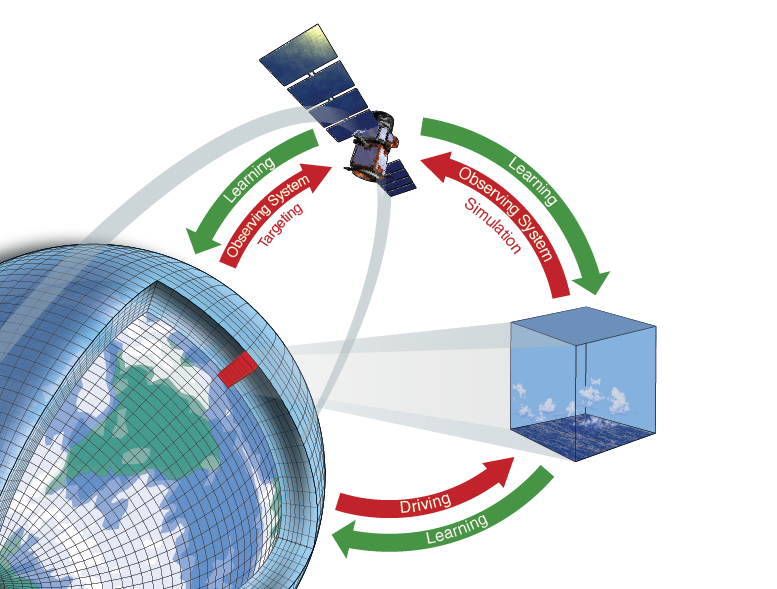

As a step forward, we have proposed a blueprint for an ESM with SGS process models that learn automatically from two sources of information (see schematic above):

- Global observations. We live in the golden age of Earth observations from space. A suite of satellites is streaming coordinated and nearly simultaneous measurements of variables such as temperature, humidity, clouds, ocean surface currents, sea ice cover, and biogeochemical variables, with global coverage for more than a decade. Although such data are used in model evaluation, only a minute fraction of the data (mostly large-scale energy fluxes) has been directly used in ESM development. ESMs can learn directly from such space-based global data, augmented and validated with more detailed local observations where available.

- Local high-resolution simulations. Some SGS processes in ESMs are in principle computable; only the resolution achievable globally precludes their explicit computation. For example, the turbulent dynamics of clouds can be computed with high fidelity in limited domains in large-eddy simulations (LES) with mesh sizes of meters to tens of meters. Increased computational performance has made LES domain widths of 10–100 km feasible in recent years. Meanwhile, the horizontal mesh size in climate models has shrunk to the point that the two scales have become comparable. Thus, while global LES that reliably resolve low clouds will not be feasible for decades, it is now possible to nest LES in limited areas of atmosphere models and conduct targeted local high-fidelity simulations of cloud dynamics in them. Local high-resolution simulations of ocean mesoscale turbulence or sea ice dynamics can be conducted similarly. ESMs can also learn from such nested high-resolution simulations.
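To learn from a nested high-resolution simulation, its output must first be converted into the quantities a grid-scale parameterization has to predict. One common approach is to block-average the fine-grid fields onto the coarse grid. The SGS flux is then the part of the averaged product that the averaged fields do not carry: mean(wθ) − mean(w)·mean(θ). The sketch below shows this in one dimension. The function names and the coarsening `factor` are illustrative assumptions, and a real LES diagnostic would average over horizontal areas in three dimensions.

```python
def block_mean(values, factor):
    """Average consecutive blocks of `factor` fine-grid values onto a coarse grid."""
    if factor <= 0 or len(values) % factor != 0:
        raise ValueError("length must be a positive multiple of the coarsening factor")
    return [sum(values[i:i + factor]) / factor for i in range(0, len(values), factor)]


def subgrid_flux(w, theta, factor):
    """Coarse-grid SGS flux: mean(w * theta) - mean(w) * mean(theta) in each block."""
    if len(w) != len(theta):
        raise ValueError("w and theta must have the same length")
    w_bar = block_mean(w, factor)
    theta_bar = block_mean(theta, factor)
    wtheta_bar = block_mean([wi * ti for wi, ti in zip(w, theta)], factor)
    return [wt - wb * tb for wt, wb, tb in zip(wtheta_bar, w_bar, theta_bar)]


# Hypothetical fine-grid vertical velocity (m/s) and potential temperature (K).
# In the first block, rising air is warm, so there is an upward SGS heat flux
# even though the block-mean vertical velocity is zero.
w = [1.0, -1.0, 1.0, -1.0]
theta = [301.0, 299.0, 300.0, 300.0]
print(subgrid_flux(w, theta, factor=2))
```

Pairs of resolved coarse-grid fields and diagnosed SGS fluxes like these are the kind of training data a parameterization could be fitted to.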

Simultaneously exploiting global observations and local high-resolution simulations with the data assimilation and machine learning tools that have recently become available presents the key opportunity for dramatic progress in Earth system modeling. Replacing the manual tuning process and the offline fitting of parameterization schemes to data from few locations, the ESM we envision will autotune itself and quantify its uncertainties based on statistics of all available data. It will adapt as new observations come online, and it will generate targeted high-resolution simulations on the fly—akin to targeted observations in weather forecasting (Palmer et al. 1998, Lorenz and Emanuel 1998). Targeted high-resolution simulations will be used to reduce and quantify uncertainties where needed to tighten estimates of SGS models of computable processes and to guard against overfitting to the present climate.
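A simple heuristic can decide where targeted high-resolution simulations would be most informative. The idea, loosely analogous to ensemble-based targeting of observations, is to run an ensemble of ESM versions with different plausible parameter values and pick the locations where their predictions disagree most. The sketch below assumes this heuristic. The function name, the regime labels, the made-up numbers, and the use of ensemble variance as the selection criterion are all hypothetical choices for illustration, not a description of a finished design.

```python
import statistics


def select_targets(ensemble_predictions, k):
    """Return the k locations with the largest ensemble variance.

    ensemble_predictions maps a location label to the predictions of one
    quantity (e.g., low-cloud cover) by each ensemble member.
    """
    spread = {loc: statistics.variance(vals) for loc, vals in ensemble_predictions.items()}
    # Largest disagreement among members is taken as largest parametric uncertainty.
    return sorted(spread, key=spread.get, reverse=True)[:k]


# Hypothetical cloud-cover predictions from a three-member parameter ensemble.
predictions = {
    "subtropical stratocumulus": [0.20, 0.45, 0.70],
    "trade cumulus": [0.30, 0.35, 0.40],
    "deep convection": [0.50, 0.50, 0.55],
}
print(select_targets(predictions, k=2))
```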

We propose that the automated learning from observations and high-resolution simulations will use statistics accumulated in time (e.g., over seasons) to:

- Minimize model biases, especially biases that are known to correlate with the climate response of models. This amounts to minimizing mismatches between time averages of ESM-simulated quantities and data.

- Minimize model-data mismatches in higher-order Earth system statistics. This includes covariances such as cloud-cover/surface temperature covariances, or ecosystem carbon uptake/surface temperature covariances, which are known to correlate with the climate response of models (so-called “emergent constraints”). It can also include higher-order statistics of quantities that are direct prediction targets, such as rainfall extremes.
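The two objectives above can be combined into one misfit function. The sketch below makes a particular, hypothetical choice of statistics: the time means of surface temperature and cloud cover, plus their covariance. It weights each squared model-data difference by an assumed noise variance, which amounts to a diagonal noise covariance. In practice, the statistics, their noise covariance, and the accumulation period (e.g., a season) would all be design choices, and the input series here are made up.

```python
def mean(xs):
    return sum(xs) / len(xs)


def covariance(xs, ys):
    """Sample covariance of two equally long time series."""
    mx, my = mean(xs), mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (len(xs) - 1)


def climate_statistics(temperature, cloud_cover):
    """First-order (means) and second-order (covariance) statistics of the series."""
    return [mean(temperature), mean(cloud_cover), covariance(temperature, cloud_cover)]


def misfit(model_stats, data_stats, noise_var):
    """Half the noise-weighted sum of squared model-data differences."""
    return 0.5 * sum((m - d) ** 2 / v for m, d, v in zip(model_stats, data_stats, noise_var))


# Hypothetical series accumulated over a (very short) averaging period.
obs = climate_statistics([288.0, 289.0, 290.0], [0.60, 0.50, 0.40])
sim = climate_statistics([288.5, 289.5, 290.5], [0.55, 0.50, 0.45])
print(misfit(sim, obs, noise_var=[0.25, 0.01, 0.01]))
```

In this example, the simulated means differ from the data only in temperature, but the simulated covariance is too weak. The covariance term therefore adds to the misfit even though the cloud-cover mean matches.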

Optimizing how well an ESM simulates climate statistics targets success metrics that are directly relevant for climate projections. It also avoids difficulties caused by sensitive dependence on atmospheric initial conditions and small-scale roughness, which otherwise complicate data assimilation at high resolution. However, minimizing model-data mismatches in climate statistics is computationally challenging because accumulating statistics from an ESM is costly: each evaluation of the target statistics requires an ESM simulation spanning at least a season. But these computational problems are becoming tractable, for example, with ensemble-based inversion methods, such as those we tested with a prototype problem in our paper.
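Ensemble Kalman inversion is one derivative-free, ensemble-based method of this kind. It updates an ensemble of parameter vectors using only forward-model evaluations, which makes it attractive when each evaluation is an expensive ESM run. The sketch below is a generic textbook version, with perturbed observations and a diagonal noise covariance, written with the standard library only. It is not claimed to reproduce the setup used in the paper. The linear two-parameter `forward` map in the usage example is a hypothetical stand-in for "run the ESM and compute its climate statistics".

```python
import random


def mean_vec(vectors):
    """Component-wise mean of a list of equally long vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def cross_cov(us, vs):
    """Ensemble cross-covariance matrix (1/J normalization) of ensembles us and vs."""
    mu, mv = mean_vec(us), mean_vec(vs)
    n = len(us)
    return [[sum((u[i] - mu[i]) * (v[k] - mv[k]) for u, v in zip(us, vs)) / n
             for k in range(len(mv))] for i in range(len(mu))]


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def eki_step(thetas, forward, y, noise_var, rng):
    """One ensemble Kalman inversion update of the parameter ensemble `thetas`."""
    gs = [forward(t) for t in thetas]      # one (expensive) model run per member
    c_tg = cross_cov(thetas, gs)           # parameter/output cross-covariance
    c_gg = cross_cov(gs, gs)               # output covariance
    d = len(y)
    a = [[c_gg[i][k] + (noise_var[i] if i == k else 0.0) for k in range(d)] for i in range(d)]
    updated = []
    for t, g in zip(thetas, gs):
        # Perturbed observation minus this member's prediction.
        innov = [y[i] + rng.gauss(0.0, noise_var[i] ** 0.5) - g[i] for i in range(d)]
        w = solve(a, innov)
        updated.append([t[p] + sum(c_tg[p][k] * w[k] for k in range(d)) for p in range(len(t))])
    return updated


# Hypothetical linear "model": two statistics that depend on two parameters.
def forward(theta):
    return [theta[0] + theta[1], theta[0] - theta[1]]


rng = random.Random(0)
y = [3.0, -1.0]                            # data generated by theta = (1, 2)
ensemble = [[rng.gauss(0.0, 2.0), rng.gauss(0.0, 2.0)] for _ in range(20)]
for _ in range(5):
    ensemble = eki_step(ensemble, forward, y, noise_var=[1e-4, 1e-4], rng=rng)
print(mean_vec(ensemble))
```

Each iteration costs one forward evaluation per ensemble member, and the members can run in parallel. This matters when every forward evaluation is a season-long ESM simulation.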

A number of hurdles need to be cleared to realize such an automatically learning ESM. For example:

- We need dynamical cores of models that are suitable for spinning off local high-resolution simulations on the fly. This will require the refactoring of existing dynamical cores, or the development of new ones.

- We need SGS process models (e.g., for the atmosphere, oceans, and biosphere) that are suitable for automated learning from diverse data sources. This includes the development of SGS process models that can be refined systematically as more data become available, and that eliminate correlations among parameters as much as possible, because correlated parameters are difficult to identify from data.

- We need new algorithms for simultaneously assimilating observations and local high-resolution simulations into ESMs. This will require a significant speed-up of existing algorithms, by minimizing the number of expensive ESM runs that are needed, for example, through filtering methods that successively update model parameters as data become available.

- We need observing system simulators and interfaces between them and an ESM to assimilate observations.
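The second hurdle above mentions correlated parameters. The hypothetical toy model below is a deliberately extreme example of why such parameters are hard to identify. Its output depends on two parameters `a` and `b` only through their product, so any pair with the same product fits the data equally well. As a result, the Gauss-Newton matrix JᵀJ built from the model's Jacobian is singular. Reformulating the model in terms of the single combination `a * b` would remove the degeneracy.

```python
def model(a, b, xs):
    """Toy SGS model whose output depends on a and b only through a * b."""
    return [a * b * x for x in xs]


def gauss_newton_determinant(a, b, xs):
    """Determinant of J^T J, where row j of J is d(output_j)/d(a, b)."""
    jac = [[b * x, a * x] for x in xs]
    s11 = sum(row[0] * row[0] for row in jac)
    s12 = sum(row[0] * row[1] for row in jac)
    s22 = sum(row[1] * row[1] for row in jac)
    return s11 * s22 - s12 * s12


xs = [1.0, 2.0, 3.0]
print(model(2.0, 3.0, xs) == model(1.0, 6.0, xs))  # different (a, b), identical output
print(gauss_newton_determinant(2.0, 3.0, xs))       # a and b not separately identifiable
```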
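The third hurdle above mentions filtering methods that update parameters successively as data become available. In the simplest linear-Gaussian case, such a method is a Kalman filter for a static parameter: each new observation updates the parameter's mean and variance without revisiting earlier data. The scalar sketch below assumes observations of the form y = hθ + noise with known noise variance, and the function name is hypothetical. For a nonlinear ESM, an ensemble approximation of this update would be needed.

```python
def kalman_parameter_update(mean, var, h, y, noise_var):
    """Update a Gaussian belief N(mean, var) about a static parameter theta
    after observing y = h * theta + noise, with noise ~ N(0, noise_var)."""
    innovation_var = h * h * var + noise_var
    gain = var * h / innovation_var
    new_mean = mean + gain * (y - h * mean)
    new_var = (1.0 - gain * h) * var
    return new_mean, new_var


# Prior N(0, 1); two observations arrive one after the other.
mean, var = 0.0, 1.0
for y in [2.0, 2.0]:
    mean, var = kalman_parameter_update(mean, var, h=1.0, y=y, noise_var=1.0)
print(mean, var)  # matches the batch posterior (mean 4/3, variance 1/3) up to rounding
```

The sequential result matches what a single batch update with both observations would give. In the linear-Gaussian case, processing data as they arrive therefore loses nothing.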
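The last hurdle above concerns observing system simulators. These map an ESM's state onto what an instrument would report, so that model and data can be compared like for like. Consider, as a hypothetical minimal example, a passive imager looking down: it sees only the total cloud cover of a column, not the cloud fraction in each model layer. Converting one to the other requires an assumption about how the layers overlap. The sketch below implements two standard idealizations, random and maximum overlap. Operational simulators, such as the CFMIP Observation Simulator Package (COSP), are far more detailed.

```python
import math


def total_cloud_cover(layer_fractions, overlap="random"):
    """Column total cloud cover seen from above, given per-layer cloud fractions."""
    if any(not 0.0 <= c <= 1.0 for c in layer_fractions):
        raise ValueError("cloud fractions must lie in [0, 1]")
    if overlap == "random":
        # Clear-sky fractions of independently placed layers multiply.
        return 1.0 - math.prod(1.0 - c for c in layer_fractions)
    if overlap == "maximum":
        # Clouds in different layers are stacked on top of each other as much as possible.
        return max(layer_fractions, default=0.0)
    raise ValueError(f"unknown overlap assumption: {overlap}")


layers = [0.5, 0.5]  # hypothetical cloud fractions in two model layers
print(total_cloud_cover(layers, "random"), total_cloud_cover(layers, "maximum"))
```

The same model state yields different "observed" cloud cover under the two assumptions. This is one reason why the interface between an ESM and the observations it learns from needs care.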

These are substantial challenges. But they can be overcome. Together with several students and postdocs, Andrew Stuart in Caltech’s Department of Computing and Mathematical Sciences and I have begun a research program addressing some of these challenges. Now we are looking for graduate students, postdocs, research scientists, and software engineers to join us. With support from Charles Trimble and the Heising-Simons Foundation, we are convening a series of workshops to identify the key opportunities and challenges in data-informed modeling of the Earth system. And with colleagues at MIT and the Naval Postgraduate School we have begun to scale up and broaden this effort into a larger initiative that has as its objective the development of an automatically learning ESM—with the ultimate goal of delivering climate projections with substantially reduced and quantified uncertainties.

November 6, 2018: Reposted with updates from climate-dynamics.org.