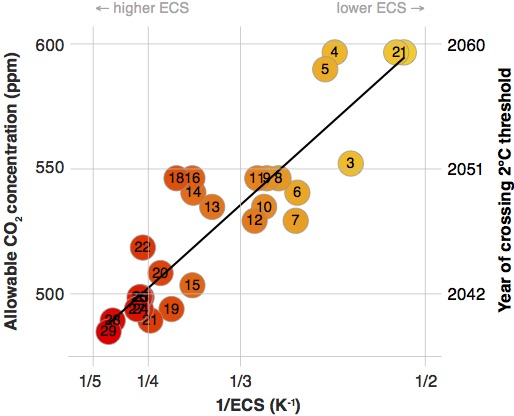

How low clouds respond to warming remains the greatest source of uncertainty in climate projections. Climate models that project a strong decrease in the sunlight reflected by low clouds as the climate warms indicate that CO2 concentrations can only reach 470 ppm before the 2℃ warming threshold of the Paris Agreement is crossed, a concentration that will probably be reached in the 2030s. By contrast, models that project a weak decrease or increase in low-cloud reflection indicate that CO2 concentrations may reach almost 600 ppm before the Paris threshold is crossed. In a new paper, we outline how new computational and observational tools enable us to reduce these vast uncertainties.

The equilibrium climate sensitivity (ECS) is a convenient yardstick to measure how sensitively the climate system responds to perturbations in the atmospheric concentration of greenhouse gases such as CO2. It measures the eventual global-mean surface warming in response to a sustained doubling of CO2 concentrations. Although it is a long-run measure and does not depend, for example, on transient effects such as ocean heat uptake, differences among climate models’ ECS turn out to be good indicators of the models’ response to CO2 increases over the coming decades. For example, if one asks how high the CO2 concentration can rise in a climate model before the surface has warmed 2℃ above pre-industrial temperatures (the warming threshold that countries pledged to avoid in the Paris Agreement), the answer depends strongly on the model’s ECS. In models with high ECS, the allowable CO2 concentration is lower, as low as 470 ppm in the models with the highest ECS; in models with low ECS, the allowable CO2 concentration is higher, reaching up to 600 ppm (Figure 1, left axis). Translated into time by assuming emissions continue to rise rapidly, 470 ppm will be reached in the 2030s, whereas 600 ppm will only be reached around 2060. This is a difference of a human generation, entirely attributable to uncertainties in physical aspects of climate models.
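The link between sensitivity and allowable CO2 concentration can be illustrated with a back-of-the-envelope calculation. The sketch below assumes that warming scales with the logarithm of the CO2 concentration and that a single effective warming per CO2 doubling describes the response. This is a simplification for illustration only; the values in Figure 1 come from full climate model simulations, not from this formula. The 280 ppm pre-industrial baseline and the function names are assumptions introduced here. Because the warming at the moment the threshold is crossed is transient, the effective warming per doubling in this sketch is generally smaller than a model's ECS.

```python
import math

# Assumed pre-industrial CO2 baseline; not stated in the text above.
PREINDUSTRIAL_CO2_PPM = 280.0


def allowable_co2_ppm(warming_per_doubling_c, threshold_c=2.0,
                      baseline_ppm=PREINDUSTRIAL_CO2_PPM):
    """CO2 concentration at which warming reaches threshold_c.

    Assumes warming = warming_per_doubling_c * log2(C / baseline_ppm),
    an idealized logarithmic scaling (hypothetical helper).
    """
    if warming_per_doubling_c <= 0:
        raise ValueError("warming per doubling must be positive")
    doublings = threshold_c / warming_per_doubling_c
    return baseline_ppm * 2.0 ** doublings


def implied_warming_per_doubling(co2_ppm, threshold_c=2.0,
                                 baseline_ppm=PREINDUSTRIAL_CO2_PPM):
    """Invert the relation: effective warming per doubling implied by
    reaching threshold_c at the concentration co2_ppm."""
    if co2_ppm <= baseline_ppm:
        raise ValueError("concentration must exceed the baseline")
    return threshold_c / math.log2(co2_ppm / baseline_ppm)


if __name__ == "__main__":
    # The two ends of the range quoted in the text.
    for ppm in (470.0, 600.0):
        s = implied_warming_per_doubling(ppm)
        print(f"{ppm:.0f} ppm -> effective warming per doubling: {s:.2f} C")
```

Under these assumptions, a larger effective warming per doubling always gives a lower allowable concentration, which is the qualitative behavior shown in Figure 1.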

The bulk of the large spread in ECS across current climate models (the wide horizontal axis in Figure 1) arises because it is uncertain how low clouds respond to warming (see this blog post for a discussion). If low-cloud cover increases as the climate warms, the warming is muted by the additional reflection of sunlight. If low-cloud cover decreases, the warming is amplified. Therefore, the allowable CO2 concentration also correlates with the strength of the low-cloud feedback in climate models (see our paper for a figure). Currently available evidence points to a decrease of low-cloud cover as the climate warms, implying an amplifying feedback of low clouds on warming. It is likely that the true ECS lies in the upper half of the distribution of ECS across models, implying that meeting the Paris target may be challenging.

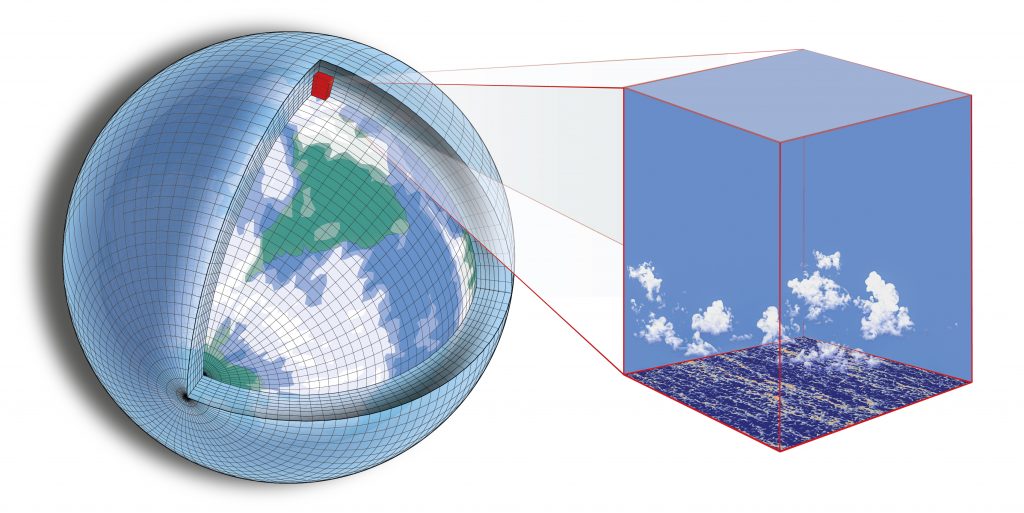

The response of low clouds to warming is uncertain because the dynamics governing low clouds occur on scales of tens of meters, whereas climate models have horizontal grid spacings of 50–100 km (see the sketch at the top). Climate models cannot resolve low clouds explicitly. Even under optimistic assumptions about computer performance continuing to increase exponentially, we estimate that climate models resolving low clouds globally will not be available before the 2060s. By then, Earth’s climate system will have revealed its true sensitivity in the experiment we are currently performing on it. For the foreseeable future, we will need to parameterize low clouds in climate models, that is, we need to relate their subgrid-scale dynamics to the resolved grid-scale dynamics of the climate model.
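The kind of reasoning behind such an estimate can be sketched with a simple scaling argument. The code below is not the calculation from the paper; it assumes that the cost of a simulation grows with the cube of the horizontal refinement factor (two horizontal dimensions plus a proportionally shorter time step), ignores vertical refinement, and treats the doubling time of available computer performance as a free, hypothetical parameter.

```python
import math


def refinement_cost_factor(coarse_spacing_m, fine_spacing_m, cost_exponent=3.0):
    """Multiplier in computational cost when refining the horizontal grid.

    cost_exponent=3 is an assumption: two horizontal dimensions plus a
    time step that shrinks in proportion to the grid spacing.
    """
    if fine_spacing_m <= 0 or coarse_spacing_m < fine_spacing_m:
        raise ValueError("need 0 < fine_spacing_m <= coarse_spacing_m")
    return (coarse_spacing_m / fine_spacing_m) ** cost_exponent


def years_until_feasible(cost_factor, doubling_time_years):
    """Years of exponential growth in computer performance needed to
    absorb cost_factor, given an assumed performance doubling time."""
    if cost_factor < 1 or doubling_time_years <= 0:
        raise ValueError("need cost_factor >= 1 and a positive doubling time")
    return doubling_time_years * math.log2(cost_factor)


if __name__ == "__main__":
    # Illustrative inputs: 50 km grid spacing today, 10 m to resolve low
    # clouds, and a hypothetical doubling time for computer performance.
    factor = refinement_cost_factor(50_000.0, 10.0)
    for doubling_time in (1.0, 1.5, 2.0):
        wait = years_until_feasible(factor, doubling_time)
        print(f"doubling time {doubling_time} yr -> about {wait:.0f} years")
```

The required wait depends only logarithmically on the cost factor but linearly on the assumed doubling time, so the result is far more sensitive to assumptions about future computing than to the exact target resolution.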

While computational advances alone will not bring about a resolution of the low-cloud problem soon, recent computational advances, paired with the availability of unprecedented observational data, do enable new approaches to the parameterization problem. We cannot simulate low clouds globally, but we can simulate them faithfully in limited domains, with large-eddy simulations (LES). LES of clouds are now feasible in domains the size of a climate model grid box, creating fresh opportunities for parameterization development. For example:

- Embedding an LES in each grid column of a global climate model is computationally feasible if the LES domains sample a small fraction of the footprint of each grid column. Such a multiscale modeling approach linking LES and climate models is beginning to enable novel global simulations of low clouds and their response to climate changes (Grabowski et al. 2016).

- Alternatively, LES that fully resolve a climate model grid column can be embedded in a small subset of grid columns, similar to the LES described here. Systematic numerical experimentation in such supercolumns can anchor the development and validation of new approaches to parameterizing clouds and the planetary boundary layer. It is the best surrogate we have for systematic experimentation with the real climate system.

In either case, LES can also be driven by weather hindcasts, and the simulation results can be evaluated against the wealth of observations that are now available, both from space and from the ground. The unprecedented availability of observations paired with the possibility of creating a computational ground truth of cloud dynamics with LES should allow us to develop well-constrained parameterizations. Any such parameterization will contain closure parameters such as entrainment rates, which are notoriously difficult to constrain. While a definitive “theory” for such parameters (latent variables in the language of statistics) may remain elusive, it may be possible to estimate them using machine learning approaches. Machine learning approaches could exploit the available observational data and our capacity to generate a computational ground truth in supercolumns to find empirical relations between closure parameters and the statistics of flow variables resolved on the grid scale.
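As a toy illustration of estimating a closure parameter from reference data, the sketch below recovers a constant entrainment rate from a synthetic profile. Everything in it is an assumption made for illustration: the idealized plume in which the excess of a scalar over its environment decays exponentially with height, the synthetic profile standing in for LES output or observations, and the function names. The estimator is ordinary least squares on the logarithm of the excess, the simplest possible stand-in for the machine learning approaches discussed above, not a method proposed in the paper.

```python
import math
import random


def synthetic_excess_profile(entrainment_rate, heights_m, surface_excess=1.0,
                             noise=0.0, seed=0):
    """Synthetic 'reference' profile of a plume's scalar excess.

    Assumes d(excess)/dz = -entrainment_rate * excess, so the excess decays
    exponentially with height. Multiplicative noise (noise < 1) mimics
    sampling error while keeping all values positive.
    """
    rng = random.Random(seed)
    return [
        surface_excess * math.exp(-entrainment_rate * z)
        * (1.0 + noise * rng.uniform(-1.0, 1.0))
        for z in heights_m
    ]


def fit_entrainment_rate(heights_m, excess):
    """Least-squares estimate of the entrainment rate.

    Fits a straight line to log(excess) versus height; the entrainment
    rate is minus the slope of that line.
    """
    logs = [math.log(x) for x in excess]
    n = len(heights_m)
    mean_z = sum(heights_m) / n
    mean_log = sum(logs) / n
    cov = sum((z - mean_z) * (l - mean_log) for z, l in zip(heights_m, logs))
    var = sum((z - mean_z) ** 2 for z in heights_m)
    return -cov / var


if __name__ == "__main__":
    heights = [100.0 * k for k in range(1, 11)]  # 100 m to 1000 m
    true_rate = 1.0e-3  # per meter; illustrative value only
    profile = synthetic_excess_profile(true_rate, heights, noise=0.05)
    print("estimated entrainment rate:", fit_entrainment_rate(heights, profile))
```

In a realistic setting the closure parameter would not be a single constant but a function of resolved grid-scale statistics, and the fit would be replaced by a more flexible regression trained on many supercolumn simulations.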

More broadly, the time is ripe to develop “learning climate models,” which from the outset incorporate the capacity to learn closure parameters (latent variables) from observations or from supercolumn simulations that are conducted as needed to constrain uncertain processes. This requires a fundamental re-engineering of climate models. However, such a re-engineering will soon become a necessity in any case if climate models are to effectively exploit advances in high-performance computing such as many-core computational architectures based on graphics processing units (Bretherton et al. 2012, Schalkwijk et al. 2015, Schulthess 2015). So opportunities abound, and climate modeling is primed for advances.

November 6, 2018: Reposted from climate-dynamics.org