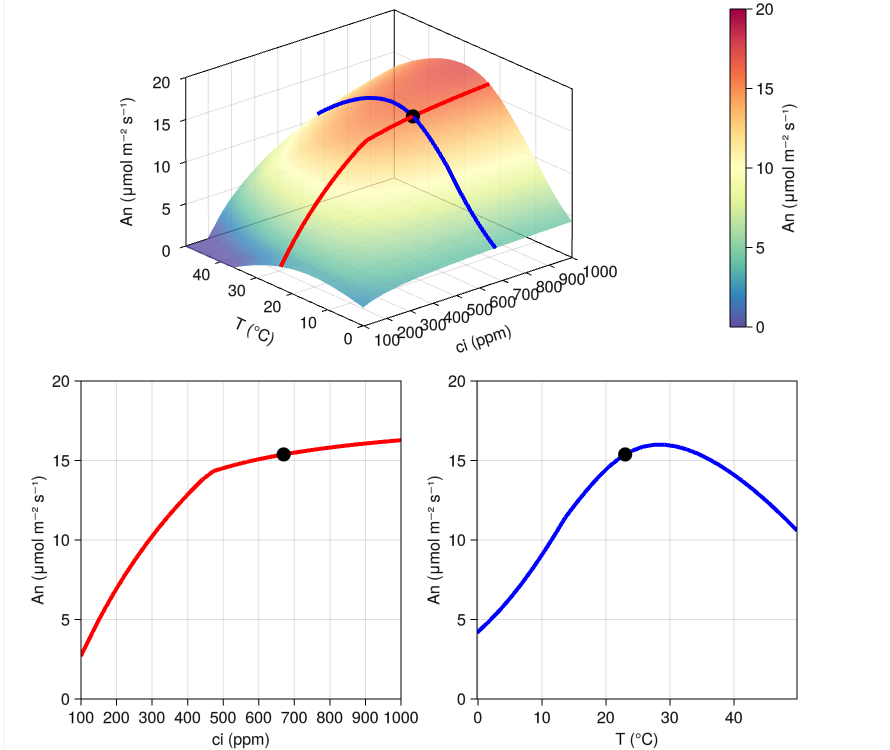

By Simone Silvestri, Gregory Wagner, and Raffaele Ferrari, for the MIT CliMA group. Ocean eddies—the ocean equivalent of atmospheric cyclones and anticyclones—play a key role in the Earth’s climate system. However, they are not simulated by climate models due to their small scale, between 10 and 100 km, which is below the resolution of standard ocean models. To approximate the climate impact of the missing eddies, modelers employ parameterizations—empirical equations that estimate the collective effect of eddies given resolved model variables such as ocean current strength, temperature, and salinity. Yet, this approach is fraught with uncertainties. For example, a 2002…



Cloud microphysics refers to the complex processes that govern the formation, evolution, and interactions of particles within clouds. These processes significantly influence the Earth’s climate system by regulating precipitation patterns and cloud cover. Understanding the intricacies of cloud microphysics is therefore essential for accurate climate modeling. Yet, the precise modeling of these complex microphysical processes remains one of the most challenging aspects of climate research. Traditional cloud microphysics modeling within climate models, known as bulk methods, aims to simplify the physics governing the vast range of processes occurring within clouds. While these methods have enabled more efficient climate simulations, their…


by Alexandre A. Renchon, Katherine Deck, and Renato Braghiere: In the realm of scientific advancement, enhancing Earth System Models (ESMs) stands out as a paramount objective. Presently, however, these models remain enigmatic enclaves for many researchers, akin to inscrutable black boxes. The labyrinthine nature of ESMs, their high computational demands, their use of esoteric programming languages, and the absence of lucid documentation and user interfaces all contribute to this opacity. To surmount these obstacles, CliMA is creating a new era of accessible ESM features for the global scientific community: Modernized Programming: Departing from convention, CliMA adopts a contemporary programming language, Julia.…


by Tobias Bischoff and Katherine Deck: Climate simulations play a crucial role in understanding and predicting climate change scenarios. However, the spatial resolution at which simulations can be run is often limited by computational resources to roughly 50-250 km in the horizontal. This leads to a lack of high-resolution detail; moreover, since small-scale dynamical processes can influence behavior on larger scales, coarse-resolution simulations can also be biased compared to a high-resolution “truth”. For example, simulations run at coarse resolutions fail to accurately capture important phenomena such as convective precipitation, tropical cyclone dynamics, and local effects from topography and…


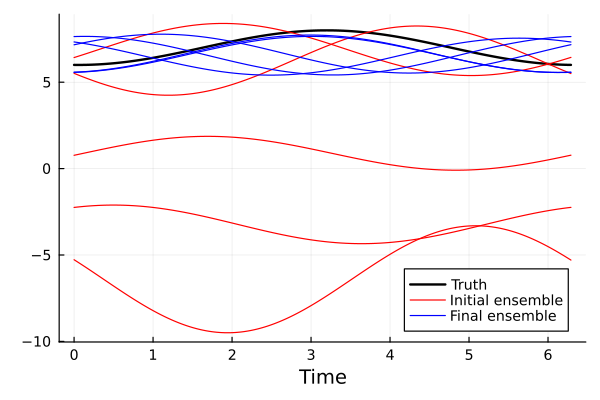

By Eviatar Bach and Oliver Dunbar: To understand this blog post, you will need some basic familiarity with probability (Bayes’ theorem, covariance) and multivariate calculus. In climate modeling, small-scale processes that cannot be resolved, such as convection and cloud physics, are represented using parameterizations (see two previous blog posts here and here). The parameterizations depend on uncertain parameters, which leads to uncertainty in simulations of future climates. At CliMA, we use observations of the current climate, as well as high-resolution simulations, to estimate these parameters. The learning problem is challenging, as the parameterized processes typically are not directly observable, and…


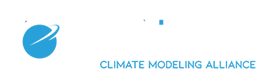

by Yujie Wang and Renato Braghiere: Climate model predictions of future land carbon sink strength show significant discrepancies. To enhance predictive accuracy and reduce inter-model disagreements, it is crucial to improve the representation of vegetation processes and calibrate the models using more observational data. However, the limitations of computational resources in the past have hindered the integration of new theories and advances into traditional climate models, which often rely on statistical models to parameterize vegetation processes instead of mechanistic and physiological models (such as stomatal control models). Additionally, the preference for faster models has limited the incorporation of complex features (e.g.,…


By Ignacio Lopez-Gomez: Over the past few years, machine learning has become a fundamental component of some newly developed Earth system parameterizations. These parameterizations offer the potential to improve the representation of biological, chemical, and physical processes by learning better approximations derived from data. Parameters within data-driven representations are learned using some kind of algorithm, in a process referred to as model training or calibration. Gradient-based supervised learning is the dominant training method for such parameterizations, mainly due to the availability of efficient and easy-to-use open source implementations with extensive user bases (e.g., scikit-learn, TensorFlow, PyTorch). However, these learning…


Weather disasters are extremely damaging to humans (e.g., severe storms, heat waves, and flooding), to our livelihoods (e.g., drought and wildfire), and to the environment (e.g., coral bleaching via marine heat waves). Although heavy storms, severe drought, and prolonged heat waves are rare, they account for the majority of the resulting negative impacts. For individuals, governments, and businesses to prepare for these events, their frequency and severity need to be quantified accurately. A major challenge, however, is that we need to form our estimates using a limited amount of historical data and simulations, where extreme events appear rarely.…


Researchers are spending far too much time finding, reading, and processing public data. The ever-increasing amount of data, the variety of data formats, and differing data layouts are increasing the time spent on handling data before any scientific analysis can begin. While the intention of sharing data is to facilitate their broad use and promote research, the increasing fragmentation makes it harder to find and access the data. Taking my personal experience as an example, I spent months identifying, downloading, and standardizing the global datasets we use with the CliMA Land model, which came in a plethora of formats (e.g., NetCDF,…


Climate models depend on dynamics across a huge range of spatial and temporal scales. Resolving all scales that matter for climate, from the scales of cloud droplets to planetary circulations, will be impossible for the foreseeable future. Therefore, it remains critical to link what is unresolvable to variables resolved on the model grid scale. Parameterization schemes are a tool to bridge such scales; they provide simplified representations of the smallest scales by introducing new empirical parameters. An important source of uncertainty in climate projections comes from uncertainty about these parameters, in addition to uncertainties about the structure of the parameterization schemes themselves.…
