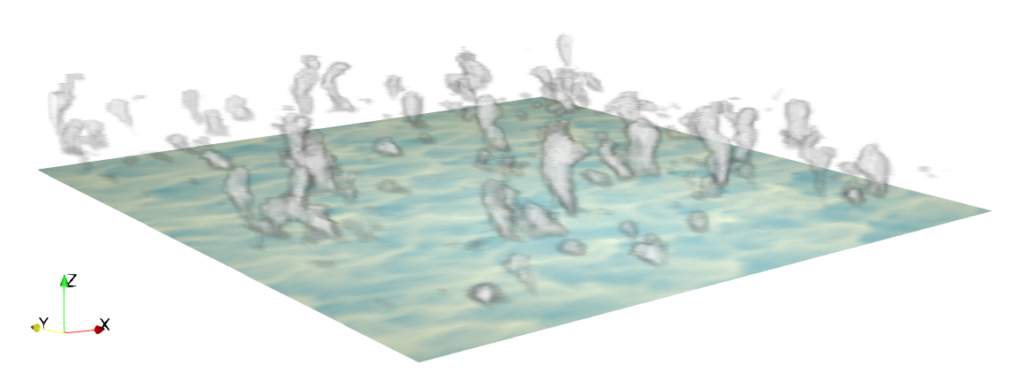

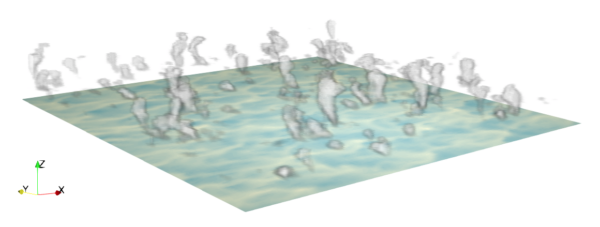

Climate models rely on parameterizations of physical processes whose direct numerical simulation (DNS) is infeasible because of its enormous computational cost. Accurately representing processes that are unresolved at the grid scale of global climate models (GCMs), such as atmospheric turbulence and convection, is important for our ability to predict and understand climate. Large-eddy simulation (LES) frameworks allow these subgrid-scale processes to be studied by simulating smaller sections of the globe in greater detail: whereas GCM grid scales are typically tens to hundreds of kilometers, LES uses grid resolutions of meters to tens of meters (Figure 1). With the goal of developing an accessible, performant high-resolution model of atmospheric phenomena, the CliMA team has developed a new LES framework called the ClimateMachine.

ClimateMachine is an open-source Julia package that facilitates model prototyping and research with the same underlying code on both CPU and GPU hardware. One of the core components of this framework is a partial differential equation solver that uses the discontinuous Galerkin (DG) method to solve the governing conservation equations for mass, momentum, energy, and total water content of air. Essentially, any limited area of the globe is subdivided into a set of elements, and the flow variables in each element are represented by a polynomial approximation to the true fluid state. The exchange of these quantities across elements is then modeled through numerical fluxes. The DG discretization lends itself to parallel computation, because computation and communication can be overlapped naturally by splitting the physical terms into those that can be computed locally within elements (volume integrals) and those that require information exchange across neighboring elements (surface integrals).
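To make the volume/surface split concrete, here is a minimal sketch in Python (chosen for brevity; ClimateMachine itself is written in Julia). It is not ClimateMachine code. It assumes a toy problem, 1D linear advection `u_t + a u_x = 0` with `a > 0` on a periodic grid, a degree-1 Legendre modal basis in each element, and an upwind numerical flux. The function names `volume_term`, `surface_term`, and `dg_rhs` are hypothetical and exist only for this illustration.

```python
import numpy as np

# Each element stores two modal coefficients: u(xi) = u0 + u1 * xi, xi in [-1, 1].
# u0 is the element mean; u1 is the linear (slope) mode.

def volume_term(u0, u1, a):
    """Element-local contribution: needs no neighbor data."""
    # Integral of a * u * d(phi_i)/dx over the element for phi_0 = 1, phi_1 = xi.
    return np.zeros_like(u0), 2.0 * a * u0

def surface_term(u0, u1, a):
    """Interface contribution: needs the trace of the left neighbor."""
    # Upwind flux for a > 0 (assumption): use the value on the left of each face.
    flux_right = a * (u0 + u1)          # flux at the right face of each element
    flux_left = np.roll(flux_right, 1)  # periodic: right-face flux of left neighbor
    return -(flux_right - flux_left), -(flux_right + flux_left)

def dg_rhs(u0, u1, a, h):
    """Time tendency of the modal coefficients for element width h."""
    v0, v1 = volume_term(u0, u1, a)
    s0, s1 = surface_term(u0, u1, a)
    # Inverse mass matrix of the Legendre basis: 1/h for mode 0, 3/h for mode 1.
    return (v0 + s0) / h, 3.0 * (v1 + s1) / h
```

In a distributed setting, `volume_term` can run while neighbor traces are still in transit, and only `surface_term` has to wait for them; this is the overlap of computation and communication described above, shown here in its simplest form.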

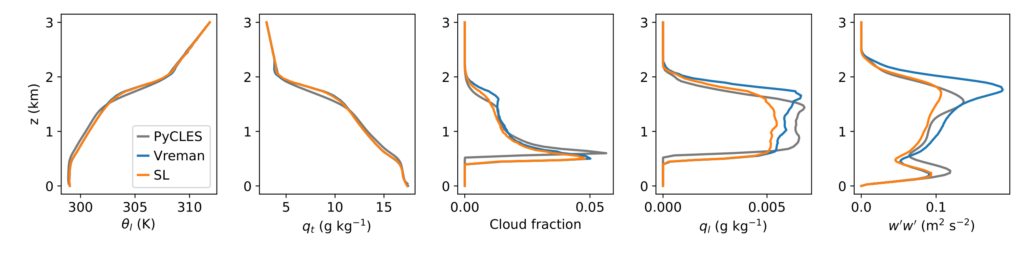

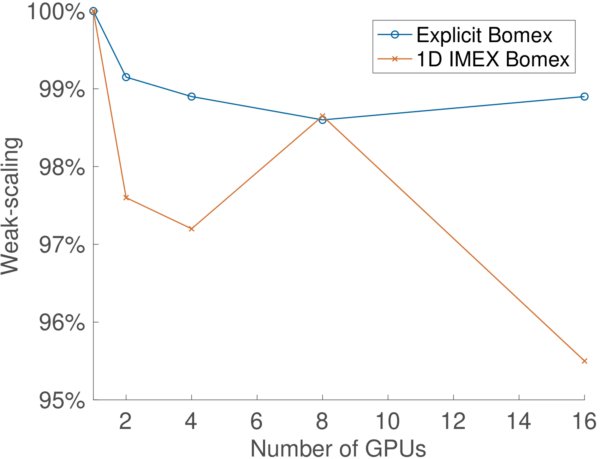

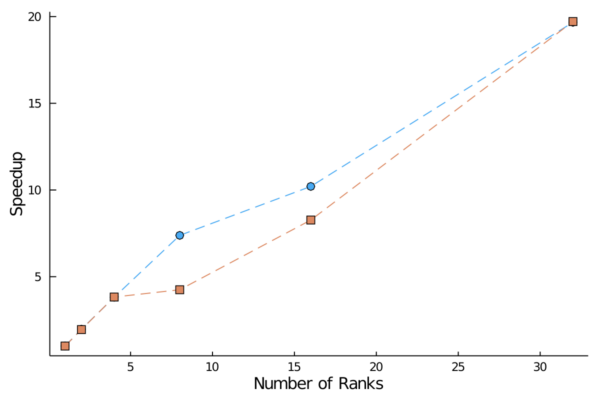

In our GMD pre-print, we demonstrate the utility and fidelity of the ClimateMachine LES through a series of benchmark problems, including atmospheric turbulence and shallow cumulus convection. By design, ClimateMachine is modular, so physical models can be added or replaced with relative ease, facilitating comparative studies of flow physics. For example, we use Julia’s multiple-dispatch paradigm to apply different models of the energy cascade in small-scale turbulence (Figure 2). The framework also supports multiple options for numerical time integration. We also examine the scaling characteristics of this LES framework, noting excellent weak scaling on up to 16 GPUs on the Google Cloud Platform (Figure 3), with weak-scaling efficiency greater than 95% for the BOMEX shallow-cumulus convection test problem. We also note a factor of 19.7 speedup when considering CPU strong scaling over a 32-rank simulation (Figure 4).
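The idea of swapping turbulence closures by dispatching on a model type can be sketched as follows. This is an illustration only, not the ClimateMachine API: it is written in Python, where `functools.singledispatch` dispatches on the first argument only and so stands in for Julia's full multiple dispatch. The two closures shown, a constant viscosity and the standard Smagorinsky-Lilly form `nu_t = (C_s * Delta)^2 * |S|`, are assumptions chosen for the example, as are the names `ConstantViscosity`, `SmagorinskyLilly`, and `eddy_viscosity`.

```python
from dataclasses import dataclass
from functools import singledispatch

import numpy as np

@dataclass(frozen=True)
class ConstantViscosity:
    nu: float   # prescribed kinematic viscosity

@dataclass(frozen=True)
class SmagorinskyLilly:
    c_s: float  # Smagorinsky coefficient

@singledispatch
def eddy_viscosity(model, grad_u, delta):
    """Fallback when no closure is registered for this model type."""
    raise NotImplementedError(f"no closure for {type(model).__name__}")

@eddy_viscosity.register
def _(model: ConstantViscosity, grad_u, delta):
    # Ignores the local flow state entirely.
    return model.nu

@eddy_viscosity.register
def _(model: SmagorinskyLilly, grad_u, delta):
    # Strain-rate tensor S = (grad_u + grad_u^T) / 2 and its norm sqrt(2 S:S).
    strain = 0.5 * (grad_u + grad_u.T)
    strain_norm = np.sqrt(2.0 * np.sum(strain * strain))
    return (model.c_s * delta) ** 2 * strain_norm
```

Solver code that calls `eddy_viscosity(model, grad_u, delta)` does not change when a new closure is added; only a new type and a new registered method are needed, which is the property that makes comparative studies of flow physics convenient.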

The ClimateMachine LES allows us to generate high-resolution datasets with which parameterizations in GCMs can be trained and improved using data assimilation and machine learning methods. Reducing uncertainties in parameterizations is critical for our goal of improving climate predictions and providing accurate, actionable information for climate change mitigation and adaptation strategies. The ClimateMachine LES framework is a step toward our longer-term goal of providing a seamless modeling framework that can go from coarser-resolution GCMs to higher-resolution LES, can exploit modern computing hardware including accelerators, and uses data assimilation and machine learning methods to inform a unified “virtual-earth” model. The team’s current efforts include the development of a new Julia dynamical core, ClimaCore, and several physics modules to capture phenomena critical to our understanding of current and future climate states.